For a couple of years now I've been working on an open-source project for VRChat that aims to bring live DMX lighting into the game by encoding the data into the pixels of a live stream. Combined with VRCDN, our community-grown dedicated streaming service, this lets us use a relay-type system to merge the different parts of a live event (music, VJ visuals, and finally DMX lighting) into a single video stream and send it to VRChat as one properly synced package, where the data controls lights, shaders, and other properties. Many of us use QLC+ extensively to run our shows, and it also serves as a test bench for developing the project.

"VR Stage Lighting is a year-long project that started out as a means to research and develop a performant/reliable way to send lighting data (including DMX512) to VRChat. It has evolved into creating a package of assets that can bring quality lighting effects in all manner of ways performantly.

This performance is provided through a standardized set of custom shaders that aim to avoid things such as real-time unity lights and using cost-saving measures such as GPU-instancing and batching."

Here is the GitHub link for more information. https://github.com/AcChosen/VR-Stage-Lighting

I'll be updating this thread periodically with different clips and updates about performances we do using QLC+ as well as other important developments that I think might seem interesting to you all.

For starters, I'll link some videos of my own event, the Orion Music Festival, an annual two-day music festival we host in VRChat that showcases VR Stage Lighting to its fullest.

Be warned, we're all doing this for fun and none of us are pros or even moderately experienced in any of this (truly we have no idea what we're doing), so I apologize if our lighting skills are a bit... rough looking.

Here are some clips from Orion Music Festival 2021:

Here is 2022:

Aftermovie:

This is what the DMX data looks like in the stream:

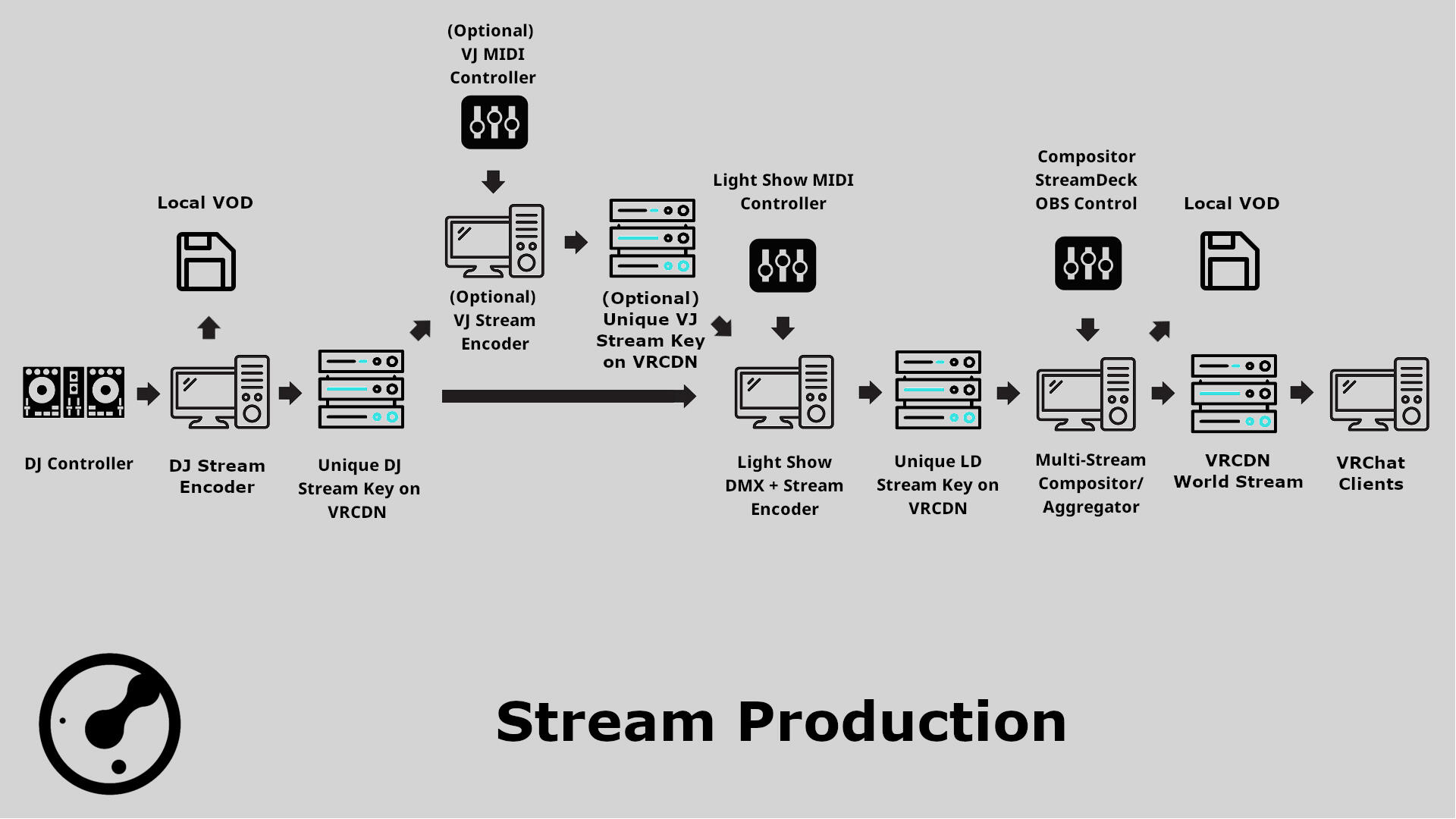
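For anyone curious about the general idea of putting DMX values into video pixels, here is a rough Python sketch. To be clear, this is not the actual VR Stage Lighting encoding: the block size, the grid width, and the function names `encode_frame` and `decode_frame` are all made up for illustration. It just writes each channel value as a grayscale square and reads it back by sampling the centre of that square.

```python
# Hypothetical layout, NOT the real VRSL format:
# each DMX channel becomes one BLOCK x BLOCK grayscale square in the frame.
BLOCK = 16  # pixels per side of one channel's square (assumed)
COLS = 32   # channel squares per row of the grid (assumed)


def encode_frame(channels):
    """Turn a list of DMX values (0-255) into a 2D list of grayscale pixels."""
    rows = -(-len(channels) // COLS)  # ceiling division
    frame = [[0] * (COLS * BLOCK) for _ in range(rows * BLOCK)]
    for i, value in enumerate(channels):
        top = (i // COLS) * BLOCK
        left = (i % COLS) * BLOCK
        for y in range(top, top + BLOCK):
            for x in range(left, left + BLOCK):
                frame[y][x] = value
    return frame


def decode_frame(frame, count):
    """Read each channel back by sampling the centre pixel of its square."""
    values = []
    for i in range(count):
        cy = (i // COLS) * BLOCK + BLOCK // 2
        cx = (i % COLS) * BLOCK + BLOCK // 2
        values.append(frame[cy][cx])
    return values


# Round-trip one full DMX universe (512 channels).
universe = [0] * 512
universe[0] = 255  # e.g. a dimmer at full
universe[1] = 128  # e.g. a pan value at the midpoint
frame = encode_frame(universe)
assert decode_frame(frame, 512) == universe
```

The sketch uses large squares rather than single pixels on the assumption that bigger blocks hold up better once the frame has been through video compression. A real stream would not round-trip exactly like this in-memory example does, so a decoder would likely need some tolerance when reading values back.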

Diagram of the production pipeline

A picture of my own personal setup using QLC+ and TouchOSC along with a few MIDI controllers.

Here is a setup from one of our main camera directors (okay, he was technically the only one of us with any real experience in any kind of live production).

Here is our website if you want more information on the specifics of the production behind the scenes including how the lighting, camera, and other aspects work in detail:

https://tech.orionvr.club/

I will be editing this thread over time with more clips and videos as well as any major updates we've discovered that I feel might be interesting to you all!